Influencing Behavioral Attributions to Robot Motion During Task Execution

Nick Walker, Christoforos Mavrogiannis, Siddhartha Srinivasa, Maya Cakmak

University of Washington

We used a 2D robot vacuum cleaning domain as a testbed for behavioral attributions.

You know how to make your robot do a task (well, you know how to make it try). But what do people think about your robot? Do they think it's competent? Do they think it's curious? Could you influence the kinds of behavioral attributions they make to the robot by changing how it does its task?

This paper gives a framework for thinking about behavioral attributions, and a recipe you can follow for baking sensitivity to these attributions into a robot's task execution. Let's get cooking.

Step 1: Figure out what the attributions are

If you want to influence attributions, you first have to decide which attributions matter for your robot. You may already know these: if people keep telling you that your robot scares them, or that it seems nosy, that's a good place to start. Set up your questions to minimize measurement error down the line. You want to be able to ask a lot of people for their attributions, and you want their responses to correspond to separable, freestanding character judgements. What you don't want is to just ask people "was the robot scary?", because then one person will say "yes, it seemed so smart I was afraid it was going to take my job" and another will say "yes, that thing's dumb as bricks and it was swinging way too close to me." If multiple concepts get lumped together, you won't be able to repeatably sample people and get consistent readings on scariness for a given trajectory.

We recommend you follow some simple best practices (taken from the psychology world) here. Build a bank of adjectives, then collect agreement or disagreement with each adjective for a given trajectory. Run a factor analysis to help you disentangle the responses. At the end, you'll have a bank of questions and a way to combine people's agreement scores on multiple items into standardized scores reflecting some underlying factors. These factor scores will be your attributions.
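As a rough illustration of that last step, here is a minimal sketch of turning agreement ratings into standardized factor scores. It assumes the factor analysis has already told you which adjectives belong to which factor. The adjectives, the item-to-factor key, and the unit-weighted scoring (average the z-scored items, flipping reverse-keyed ones) are illustrative assumptions, not the paper's scoring procedure.

```python
import numpy as np

# Hypothetical item key: adjective -> (factor, sign). In practice the grouping comes
# from your factor analysis; a sign of -1 marks a reverse-keyed item.
ITEM_KEY = {
    "competent": ("competence", 1),
    "efficient": ("competence", 1),
    "clumsy": ("competence", -1),
    "broken": ("brokenness", 1),
    "glitchy": ("brokenness", 1),
    "curious": ("curiosity", 1),
    "inquisitive": ("curiosity", 1),
}


def factor_scores(responses, items, key=ITEM_KEY):
    """Combine Likert ratings (rows = individual ratings, columns = items) into
    standardized, unit-weighted factor scores, one array per factor."""
    responses = np.asarray(responses, dtype=float)
    # z-score each item across all collected ratings so items share one scale
    z = (responses - responses.mean(axis=0)) / responses.std(axis=0)
    scores = {}
    for factor in sorted({f for f, _ in key.values()}):
        cols = [i for i, name in enumerate(items) if key[name][0] == factor]
        signs = np.array([key[items[i]][1] for i in cols], dtype=float)
        # flip reverse-keyed items, then average the items belonging to this factor
        composite = (z[:, cols] * signs).mean(axis=1)
        # re-standardize so each factor score has mean 0 and unit variance
        scores[factor] = (composite - composite.mean()) / composite.std()
    return scores


# Usage with made-up ratings from four raters on a 1-5 agreement scale.
items = list(ITEM_KEY)
ratings = [
    [5, 5, 1, 1, 1, 3, 3],
    [1, 1, 5, 5, 5, 3, 4],
    [3, 4, 2, 2, 1, 5, 5],
    [2, 2, 4, 4, 5, 1, 1],
]
scores = factor_scores(ratings, items)
```

Unit weighting is the simplest choice; weighting each item by its estimated loading (for example, regression-based factor scores) is a common alternative.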

Step 2: Build your attribution prediction model

Our recipe for getting a model to predict behavioral attributions to a robot's trajectories.

So you have your attributions, and you know how to collect them for arbitrary trajectories. Now we want to be able to predict them. You can pick whatever model you want, but know that attributions are fickle things. Even if you've done a top-notch job setting up your questions, attributions are inherently subjective and noisy. There is no single true attribution for a trajectory; instead, there's a distribution of attributions that people will give.

You'll need a dataset of trajectories. Maybe you're Waymo and you have that kind of data just lying around, but otherwise you'll want to generate a big enough set of trajectories to cover your attribution space. Feel free to make or collect demonstrations to bootstrap here.
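If you have no data at all, one cheap and purely illustrative way to seed a corpus is to sample many random walks of different lengths on the map. The sketch below assumes a small hypothetical grid and start cell; it is not how the paper built its dataset, and in practice you would likely mix samples like these with demonstrations and planner outputs so the corpus spans very different behaviors.

```python
import random

# Hypothetical map: '.' is free floor, '#' is an obstacle.
GRID = [
    "......",
    ".##...",
    "......",
    "...#..",
]
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def free(cell):
    """True if the cell lies inside the grid and is not an obstacle."""
    r, c = cell
    return 0 <= r < len(GRID) and 0 <= c < len(GRID[0]) and GRID[r][c] == "."


def random_walk(start, length, rng):
    """Sample one trajectory as a list of grid cells, stepping only onto free cells.
    The previous cell is always a valid option, so the choice list is never empty."""
    path = [start]
    for _ in range(length):
        r, c = path[-1]
        options = [(r + dr, c + dc) for dr, dc in MOVES if free((r + dr, c + dc))]
        path.append(rng.choice(options))
    return path


def sample_corpus(n, start=(0, 0), min_len=5, max_len=40, seed=0):
    """Sample n random walks whose step counts vary between min_len and max_len."""
    rng = random.Random(seed)
    return [random_walk(start, rng.randint(min_len, max_len), rng) for _ in range(n)]


corpus = sample_corpus(20)
```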

Once you've collected user responses, we suggest you use something like a mixture density network to predict the distribution of attributions for a given trajectory. We worked our example in the low-data regime (because we're not Waymo), so we had to juice the learning process a bit by crafting a feature representation of the trajectories. This wasn't that hard; we asked people what made them pick their responses and then turned the common responses into features.
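To make the two ingredients concrete, here is a NumPy sketch of hand-crafted trajectory features and of the output head and loss of a mixture density network for a single attribution score. Floor coverage echoes the competence cue discussed in the results below; the revisit and turning features, the single linear layer standing in for the network, and the random weights are hypothetical placeholders. Training (minimizing the loss over all rated trajectories, typically with an autodiff library) is omitted.

```python
import numpy as np


def features(path, n_free_cells):
    """Hypothetical hand-crafted features for a grid trajectory (list of (row, col) cells)."""
    unique = len(set(path))
    coverage = unique / n_free_cells          # fraction of the floor visited
    revisits = 1.0 - unique / len(path)       # fraction of steps on already-visited cells
    steps = np.diff(np.array(path), axis=0)
    # fraction of consecutive moves that change direction
    turns = np.mean(np.any(steps[1:] != steps[:-1], axis=1)) if len(steps) > 1 else 0.0
    return np.array([coverage, revisits, turns, 1.0])  # trailing 1.0 acts as a bias term


def mdn_params(x, W):
    """Map features x (d,) through a linear layer W (d, 3K) to K mixture components."""
    out = x @ W
    K = W.shape[1] // 3
    logits, means, log_stds = out[:K], out[K:2 * K], out[2 * K:]
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()                  # softmax gives mixture weights
    return weights, means, np.exp(log_stds)   # exp keeps standard deviations positive


def mdn_nll(y, weights, means, stds):
    """Mean negative log-likelihood of observed attribution scores y under the mixture."""
    y = np.asarray(y, dtype=float)[:, None]
    log_comp = -0.5 * ((y - means) / stds) ** 2 - np.log(stds) - 0.5 * np.log(2 * np.pi)
    log_mix = np.log(weights) + log_comp
    m = log_mix.max(axis=1, keepdims=True)    # log-sum-exp for numerical stability
    return float(-(m[:, 0] + np.log(np.exp(log_mix - m).sum(axis=1))).mean())


# Usage: untrained weights with K = 2 components, scored on three made-up ratings.
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(4, 3 * 2))
path = [(0, 0), (0, 1), (0, 2), (1, 2), (0, 2)]
x = features(path, n_free_cells=20)
weights, means, stds = mdn_params(x, W)
loss = mdn_nll([0.3, -0.1, 1.2], weights, means, stds)
```

The mixture lets the model place probability mass on several distinct readings of the same trajectory, which a single Gaussian cannot do when raters split into camps.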

Step 3: Use the model to optimize trajectories

We suggest that you use a task cost ceiling when optimizing.

You can use your model for a bunch of purposes, but we were interested in making our robot elicit the attribution _while doing a task_. Some attributions are at odds with task performance, so it's not obvious how to balance the two. We suggest that you set a cost ceiling and optimize trajectories to elicit the attribution while keeping the task cost below this ceiling.
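One way to write the ceiling-constrained problem down, in our own notation: $\tau$ is a trajectory, $\hat{p}_\theta(a \mid \tau)$ is the model's predicted attribution distribution, $p^\star(a)$ is the desired attribution distribution, $C$ is the task cost, $\tau_{\mathrm{opt}}$ is the optimal plan, and $\alpha \ge 1$ is the ceiling expressed as a multiple of the optimal cost (the experiments below use 1x, 2x, 4x, and 12x). The distance $D$ between distributions is an assumption; any divergence, or simply the gap between predicted and target means, fits the description.

$$
\tau^\star = \arg\min_{\tau} \; D\big(\hat{p}_\theta(a \mid \tau),\; p^\star(a)\big)
\quad \text{subject to} \quad C(\tau) \le \alpha \, C(\tau_{\mathrm{opt}})
$$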

The details of the optimization will change from robot to robot. We implemented a trajectory optimization scheme tailored for our virtual robot vacuum cleaning domain. We generated an optimal coverage plan via conventional planning methods, then used the model to rank adjacent trajectories, searching for a modified trajectory that the model thought would get the closest to the desired attribution.
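The search described above can be sketched as greedy local search: start from the optimal coverage plan, score every neighbor that stays under the ceiling, and move to the one predicted to be closest to the target until nothing improves. The "detour" neighborhood, the straight-line starting plan, and the toy predictor (revisit fraction standing in for a learned model) are hypothetical; only the overall loop mirrors the description.

```python
from typing import Callable, List, Tuple

Cell = Tuple[int, int]
Path = Tuple[Cell, ...]


def detour_neighbors(path: Path) -> List[Path]:
    """Hypothetical neighborhood: after any cell, step to an adjacent cell and back.
    Assumes an unbounded grid without obstacles, for brevity."""
    out = []
    for i, (r, c) in enumerate(path):
        for dr, dc in [(-1, 0), (1, 0), (0, -1), (0, 1)]:
            out.append(path[: i + 1] + ((r + dr, c + dc), (r, c)) + path[i + 1 :])
    return out


def optimize(initial: Path, neighbors: Callable[[Path], List[Path]],
             cost: Callable[[Path], float], distance: Callable[[Path], float],
             ceiling: float) -> Path:
    """Greedy local search toward the target attribution under a task cost ceiling."""
    current, best = initial, distance(initial)
    while True:
        feasible = [p for p in neighbors(current) if cost(p) <= ceiling]
        if not feasible:
            return current                      # every neighbor breaks the ceiling
        candidate = min(feasible, key=distance)
        if distance(candidate) >= best:
            return current                      # no neighbor gets closer to the target
        current, best = candidate, distance(candidate)


def cost(p: Path) -> float:
    return len(p) - 1                           # number of moves


def distance(p: Path, target: float = 0.3) -> float:
    # toy stand-in "model": predicted attribution is the fraction of revisited steps
    predicted = 1 - len(set(p)) / len(p)
    return abs(predicted - target)


plan: Path = tuple((0, c) for c in range(8))    # stand-in for the optimal coverage plan
result = optimize(plan, detour_neighbors, cost, distance, ceiling=2 * cost(plan))
```

Because every detour adds moves, the ceiling alone guarantees the loop terminates; a real planner would also need obstacle checks and a neighborhood suited to coverage.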

How well does it work?

Pretty well. The models we produced for our 2D vacuum cleaning domain are competent attribution predictors in a corpus evaluation, and when used to generate trajectories we see clear qualitative "styles" emerge.

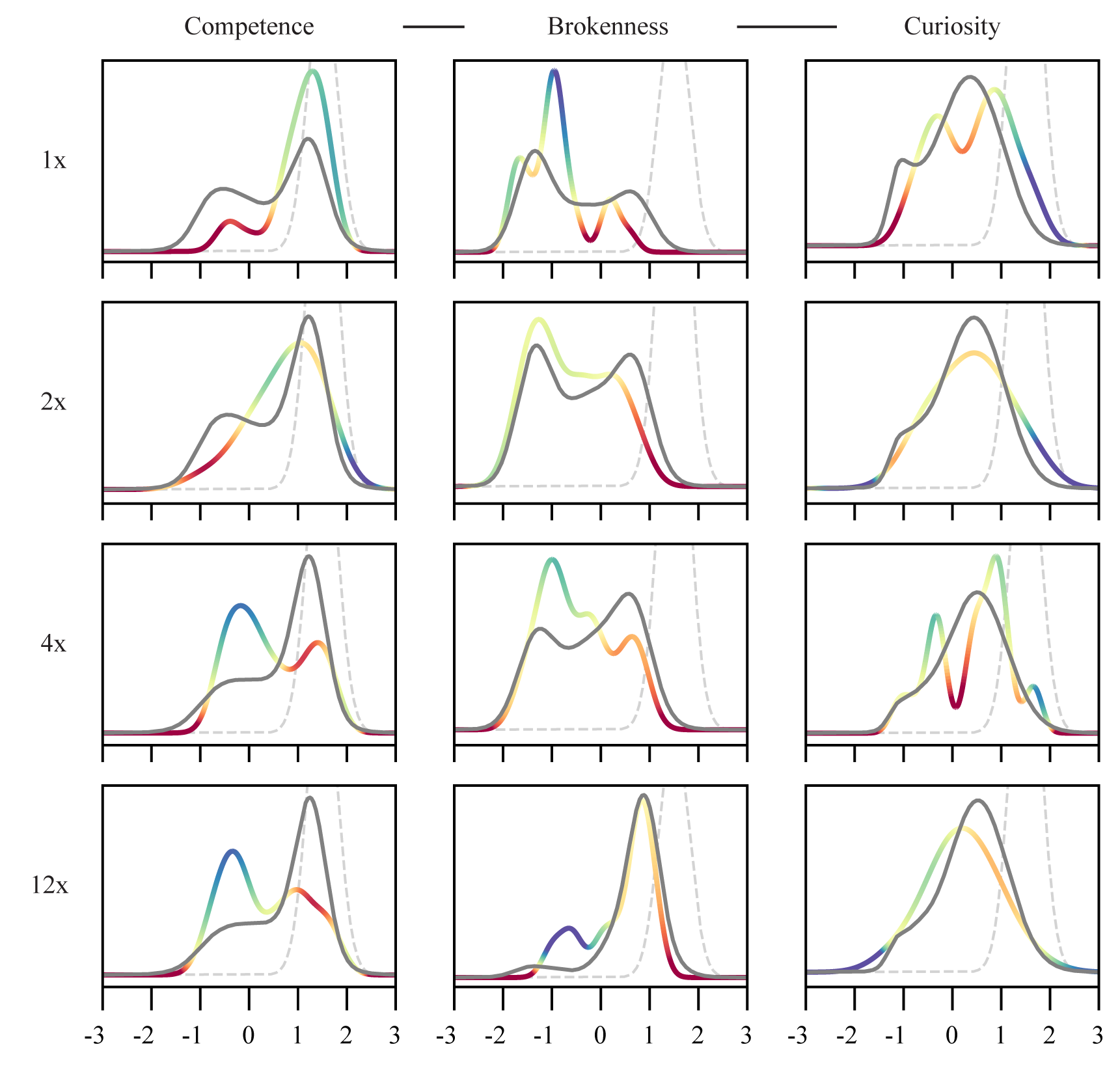

Experiment I: videos of generated trajectories, with one column per attribution (Competence, Brokenness, Curiosity) and one row per cost ceiling (1x, 2x, 4x, and 12x the optimal cost).

We generated trajectories for cleaning the bedroom with varying cost ceilings (in multiples of the optimal cost). Participants saw one column (four of the videos) and provided their attributions. The empirical distribution of their scores is shown as the multicolored line, the predicted distribution as the grey line, and the desired attribution distribution as the dashed line.

Participants who rated the generated trajectories agreed that the curiosity- and brokenness-optimized trajectories looked as intended, but they didn't think the competence-optimized trajectories looked competent. What happened there? During training, the model had learned that covering more floor space made users attribute more competence to the robot. But in this evaluation, every column included the optimal (1x) trajectory, which probably anchored participants' expectations.

We weren't always able to push an attribution to eleven, but participants generally agreed on which condition was the "most" or "least" competent, broken, or curious.

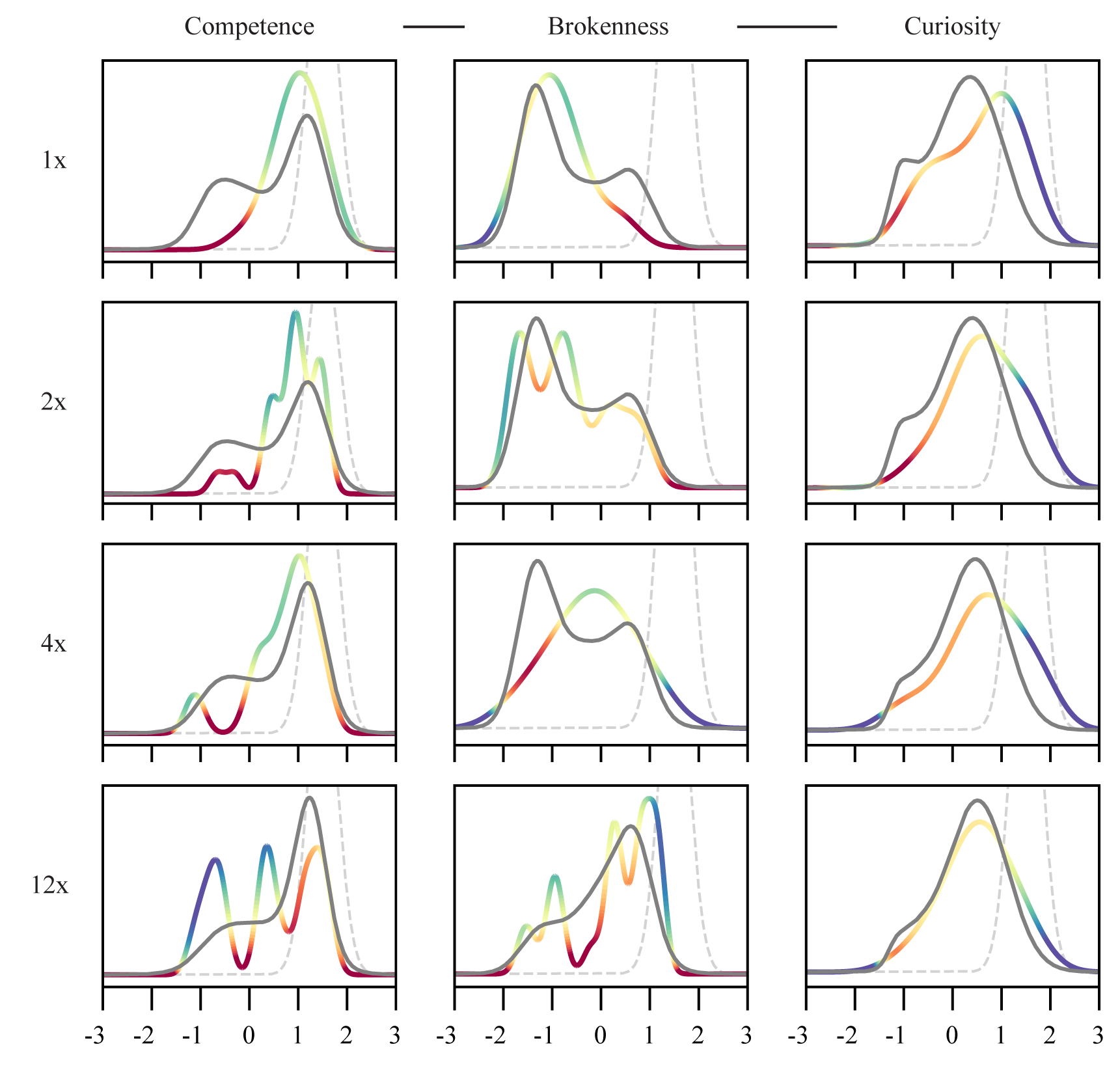

Experiment II: videos of generated trajectories in a new layout, with one column per attribution (Competence, Brokenness, Curiosity) and one row per cost ceiling (1x, 2x, 4x, and 12x the optimal cost).

We changed the layout to see whether we could still generate communicative trajectories in an environment the model had not been trained on. The results were similar to those from Experiment I.

PS: We built a cute domain

We built a discrete gridworld robot vacuum-cleaning facsimile that runs in the browser, both for playing back trajectories and for collecting demonstrations.

BibTeX Entry

@inproceedings{walker2021attributions,
  author = {Walker, Nick and Mavrogiannis, Christoforos and Srinivasa, Siddhartha and Cakmak, Maya},
  title = {Influencing Behavioral Attributions to Robot Motion During Task Execution},
  booktitle = {Conference on Robot Learning (CoRL)},
  location = {London, UK},
  month = nov,
  year = {2021},
  wwwtype = {conference},
  wwwpdf = {https://openreview.net/pdf?id=UIaodSPHNFN}
}